31 Jan 2024

Deep Reinforcement Learning 02 (Imitation Learning)

Keypoints:

- Imitation Learning

- Decision Making

- Cost Function

- What makes behavior cloning easy and what makes it hard?

- Why might we fail to fit the expert?

- Methods to solve the problems

- Shortcomings of imitation learning

Decision Making

- Supervised Learning: Full Observation vs Partial Observation

-

Supervised learning fits a model to features and labels and outputs a distribution, i.e. the probability of each action. The distribution can be discrete or continuous. $\pi_{\theta}(a_t \vert o_t)$ is the probability of taking action $a_t$ given the observation $o_t$.

-

Sometimes the policy is written as $\pi_{\theta}(a_t \vert s_t)$, where $s_t$ is the state. This is the fully observed case. When the observation $o_t$ does not reliably reveal the environment, the problem is partially observed, and the state $s_t$ reflects the environment better than $o_t$ does.

-

Markov Property: the state $s_{t+1}$ depends only on the state $s_t$ and the action $a_t$. The state $s_t$ summarizes the history of the environment, so $s_{t-1}$ has no further influence on $s_{t+1}$ once $s_t$ is given.

-

IID: Independent and Identically Distributed

- Every data point is drawn independently of the others, and all of them come from the same distribution.

- Standard supervised learning relies on this assumption. When it is violated, the model may not generalize well.

This image shows that an error made at one time step changes the state at the next time step, so errors accumulate. The visited states are therefore not independent and identically distributed, i.e. the assumption behind supervised learning no longer holds.
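The accumulation of errors can be sketched with a toy simulation. Everything here is an assumption made for illustration: the dynamics $x_{t+1} = x_t + a_t$, an expert that always stays on the path ($a_t = 0$), and a learner whose actions carry a small random error that it never corrects because it has not seen off-path states.

```python
import numpy as np

def rollout_offsets(action_noise=0.05, horizon=100, n_rollouts=500, seed=0):
    """Offsets from the expert's path for a learner with small action errors.

    Assumed toy dynamics: x_{t+1} = x_t + a_t. The expert would always pick
    a_t = 0; the learner picks a_t = 0 + error and never steers back.
    """
    rng = np.random.default_rng(seed)
    errors = action_noise * rng.standard_normal((n_rollouts, horizon))
    # Each row is one rollout; the offset at time t is the sum of past errors.
    return np.cumsum(errors, axis=1)

offsets = rollout_offsets()

# Spread of the visited states at the first and the last time step.
spread = offsets.std(axis=0)
print("spread at first step:", spread[0])
print("spread at last step: ", spread[-1])

# Consecutive states are strongly correlated, so they are not independent.
corr = np.corrcoef(offsets[:, -2], offsets[:, -1])[0, 1]
print("correlation between consecutive states:", corr)
```

In this sketch the state distribution widens over time and consecutive states are highly correlated, which is the sense in which the visited states are not i.i.d.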

Cost Function

For $0$-$1$ loss, the cost is zero only when the action matches the expert's action $\pi^*(s)$: \(c(s,a) = \begin{cases} 0 & \text{if } a = \pi^*(s) \\ 1 & \text{otherwise} \end{cases}\)

For log probability (written as a reward, so its negative is the loss): \(r(s,a) = \log p(a=\pi^*(s) \vert s)\)
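As a minimal sketch of the two quantities above for a discrete action space: the function names, the three-action example and the probability values are hypothetical, and the expert's action stands in for $\pi^*(s)$.

```python
import numpy as np

def zero_one_cost(action, expert_action):
    """Return 0 if the action matches the expert's action, 1 otherwise."""
    return 0 if action == expert_action else 1

def log_prob_reward(policy_probs, expert_action):
    """Log-probability that the policy assigns to the expert's action."""
    return float(np.log(policy_probs[expert_action]))

# Hypothetical pi_theta(. | s) over three discrete actions in one state.
probs = np.array([0.7, 0.2, 0.1])
expert_action = 0

print(zero_one_cost(int(np.argmax(probs)), expert_action))
print(zero_one_cost(2, expert_action))
print(log_prob_reward(probs, expert_action))
```

The 0-1 cost only says whether the chosen action was right, while the log probability also reflects how confident the policy was in the expert's action.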

What makes behavior cloning easy and what makes it hard?

-

Intentionally add mistakes and corrections: including mistakes together with their corrections in the dataset can improve the model. The agent will probably make errors of its own, and it recovers better if the dataset contains examples of how to recover from errors. So the mistakes hurt but the corrections help, often more than the mistakes hurt.

-

Data augmentation: add some $\underline{fake}$ data to the dataset, then add $\underline{corrections}$ for the fake data. This helps the model learn from mistakes and corrections.
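One way to picture the augmentation idea is to perturb the expert's states and label the perturbed states with corrective actions. This is only a sketch under strong assumptions: the 1D state, the `expert_policy` function that can label any state, and the noise scale are all hypothetical.

```python
import numpy as np

def expert_policy(states):
    """Hypothetical expert: steer back toward the lane centre at 0."""
    return -states

def augment_with_corrections(states, noise_scale=0.3, seed=0):
    """Create perturbed ("fake") states and the expert's corrections for them."""
    rng = np.random.default_rng(seed)
    fake_states = states + noise_scale * rng.standard_normal(states.shape)
    corrections = expert_policy(fake_states)
    return fake_states, corrections

# The expert itself stays at the centre, so the raw data has no recoveries.
states = np.zeros(100)
fake_states, corrections = augment_with_corrections(states)

# Augmented dataset: original pairs plus (fake state, correction) pairs.
aug_states = np.concatenate([states, fake_states])
aug_actions = np.concatenate([expert_policy(states), corrections])
print(aug_states.shape, aug_actions.shape)
```

The augmented dataset now contains off-centre states paired with actions that steer back, which the original demonstrations lacked.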

Why might we fail to fit the expert?

- Non-Markovian behavior: the expert's action depends not only on the current state but also on previous states. This is hard to fit because a policy that sees only the current observation cannot represent such behavior. Humans are often non-Markovian.

- Methods: sequence models that take the history of observations as input, such as LSTM, Transformer, etc.

- Multi-modal behavior: the expert has multiple behaviors, and more than one action leads to good results. With a unimodal continuous action distribution (e.g. a single Gaussian), the learned distribution may average the modes and fit none of the expert's actions.

- Methods: Mixture of Gaussians, latent variable models, diffusion models, etc.

- Mixture of Gaussians: a set of Gaussian distributions, each with its own mean and variance, and a scalar weight to balance these distributions.

- Latent variable models: a random latent variable is given to the policy as an extra input and selects which distribution to sample from. Training also has to make this random input useful, e.g. with an auto-encoder-style model.

- Diffusion models: add noise to the data, and then remove the noise. The network is trained to predict the noise.

- Discretization: discretize the continuous action space. This works especially well for 1D actions.
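The multi-modal problem and the discretization remedy can be sketched on made-up data. The bimodal "steer left or right" actions, the noise level and the bin layout are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical expert data: steer left (-1) or right (+1) around an obstacle.
modes = rng.choice([-1.0, 1.0], size=2000)
actions = modes + 0.1 * rng.standard_normal(2000)

# Single Gaussian (maximum likelihood): the mean lands between the two modes,
# i.e. on an action the expert never takes.
gauss_mean, gauss_std = actions.mean(), actions.std()
print("single Gaussian mean and std:", gauss_mean, gauss_std)

# Discretization: a categorical distribution over bins keeps both modes.
edges = np.linspace(-2.1, 2.1, 22)            # 21 bins of width 0.2
counts, _ = np.histogram(actions, bins=edges)
probs = counts / counts.sum()
centers = 0.5 * (edges[:-1] + edges[1:])

# The two most likely bins sit on the two expert modes.
top_two = np.sort(centers[np.argsort(probs)[-2:]])
print("two most likely bin centers:", top_two)

# Sampling from the discretized policy returns one of the real modes.
sample = rng.choice(centers, p=probs)
print("sampled action:", sample)
```

A mixture of Gaussians would keep both modes as well while staying continuous; the histogram is used here only because it is the shortest to write down.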

Methods to solve the problems

- Multi-task training: compared with a single-goal policy $\pi_{\theta}(a \vert s)$, multi-task training learns $\pi_{\theta}(a \vert s,g)$, where $g$ is the goal.

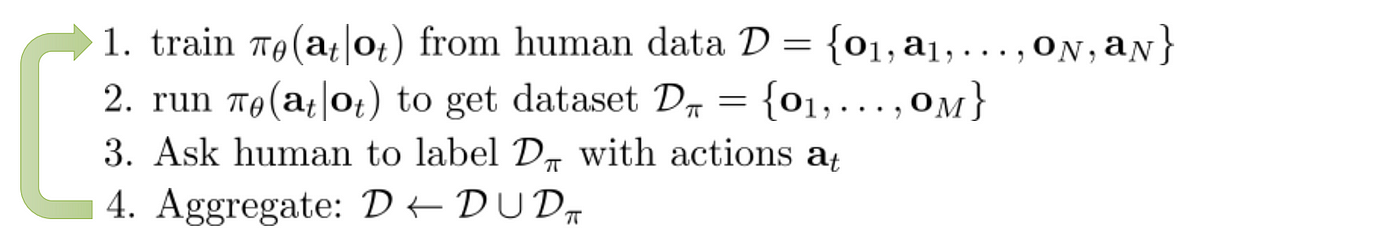

- DAgger: Dataset Aggregation. The agent is trained on the dataset, and then the agent itself is used to collect more data. Instead of making $p_{\pi_{\theta}}(o_t) = p_{\text{data}}(o_t)$ by improving the policy, we make $p_{\text{data}}(o_t) = p_{\pi_{\theta}}(o_t)$ by changing the data. The training data then covers the observations the agent actually visits.

DAgger: Step 3 is hard to achieve.
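The loop can be sketched as follows, assuming the usual four-step formulation of DAgger (train on the dataset, run the policy, have the expert label the visited states, aggregate). The 1D dynamics, the linear policy and the `expert_action` function are hypothetical. Note that the labeling step is a cheap function call here, whereas with a human expert it means asking a person to label every visited state.

```python
import numpy as np

def expert_action(x):
    """Hypothetical expert: steer back toward 0, saturating at +/-1."""
    return np.clip(-2.0 * x, -1.0, 1.0)

def fit_policy(states, actions):
    """Least-squares linear policy a = w * x + b."""
    A = np.stack([states, np.ones_like(states)], axis=1)
    w, b = np.linalg.lstsq(A, actions, rcond=None)[0]
    return w, b

def run_policy(w, b, horizon, rng):
    """Roll out the learned policy and return the states it visits."""
    x, visited = rng.normal(0.0, 1.0), []
    for _ in range(horizon):
        visited.append(x)
        # Assumed toy dynamics with a little process noise.
        x = x + 0.5 * (w * x + b) + 0.1 * rng.standard_normal()
    return np.array(visited)

def dagger(n_iters=5, horizon=50, seed=0):
    rng = np.random.default_rng(seed)
    states = rng.normal(0.0, 0.2, size=50)   # initial expert demonstrations
    actions = expert_action(states)
    sizes = [len(states)]
    for _ in range(n_iters):
        w, b = fit_policy(states, actions)              # 1. train on the dataset
        new_states = run_policy(w, b, horizon, rng)     # 2. run the policy
        new_actions = expert_action(new_states)         # 3. expert labels them
        states = np.concatenate([states, new_states])   # 4. aggregate
        actions = np.concatenate([actions, new_actions])
        sizes.append(len(states))
    w, b = fit_policy(states, actions)
    return w, b, sizes

w, b, sizes = dagger()
print("final policy: a = %.3f * x + %.3f" % (w, b))
print("dataset size per iteration:", sizes)
```

Each iteration adds states drawn from the learner's own rollouts, so the dataset gradually shifts toward the distribution the policy actually visits.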

Shortcomings of imitation learning

- Humans need to provide the data: the data is finite, so the model may not generalize well, whereas deep learning works best with large datasets.

- Humans are not good at providing some kinds of data: for example, human demonstrations are often non-Markovian, multi-modal, etc.